Maxime Basse

I'm passionate about using innovative machine learning solutions to come up with solutions to complex problems. My recent domains of interest include LLMs optimization, RAGs, GenAI and the interface between optimization and machine learning.

How to use Retrieval Augmented Generation to create Thematic Comparative analyses of french Poems?

Improved Querying

Response Reranking

RAG vs. Chatbots for retrieval

How to single out the direct causal influence of a promotion on the consumer's behavior?

Dealing with large amounts of invoice data

Two Causal Inference Approaches to determine the effect of a promotion

Training models to predict sales residues

How to define the metric of success for a startup and which features can help us forecast promising companies?

Binary vs. continuous measure of success

Engineering features about funding rounds

Model selection for best performance

How much of the compute resources should be saved for inference-time enhancing methods?

Finding Relevant Data

Mixed Integer Optimization Formulation

Stochastic Optimization through Scenarios

How to encode musical notes and learn their sequence to generate original musical pieces?

Hybrid Prescription Method: Leveraging classification and regression trees

Robustness formulation through error budget uncertainty set

Comparison of naive poit prediction, robust optimization and prescriptive average methods.

How to encode musical notes and learn their sequence to generate original musical pieces?

Defining A Musical Vocabulary

Training the Model

App Interface

Other Projects

The articles above highlight just a few of my key projects, but my work spans a wide range of other topics, which are listed below. Feel free to reach out if you're curious about any of these topics—I’d be happy to share more details or discuss them further!

Fine Tuning Large Protein Models such as ESM-3 for binding affinity predictions

Data acquisition techniques for combinatorial mutagenesis datasets involving active learning and epistasis cluster detection

Improvement over a personalized federated learning framework to speed up sonvergence and to make it robust to malicious actors sharing fake gradients

Implementation of Reed Solomon error correcting codes and visual demo of the reparation process on deteriorated black and white images

Implementation of Elliptic Curve Cryptography Method and web app showing how this security scheme is used in Bitcoin blockchain

Comparing the efficiency of convex hull algorithms for different types of points distributions and dimensions

Studying the lift and drag forces on different designs of paragliding kites both with a numerical model and with wind tunnel experiments

Exploring vulnearbilities in an image classification CNN, coming up with attack schemes to deteriorate the performance of the model or force misclassification on a specific class

About Me

I thrive at the intersection of cutting-edge technology and creative problem-solving, driven by a passion for advancing the boundaries of artificial intelligence.Ensuring that AI systems are fair, robust, and interpretable is a central theme in my work. As these technologies become pervasive in everyday life, I am committed to addressing their societal impact. My recent areas of focus include:- Optimization Methods for LLMs: Exploring techniques like quantization to reduce computational overhead and enhance model efficiency, as well as test-time strategies such as Chain of Thought reasoning to improve performance on complex tasks.

- Multi-Agent Systems: Investigating how increasingly complex modules built on the standard LLM layer can enable adaptive collaboration, decentralized decision-making, and emergent behaviors in dynamic environments.

- Mechanistic Interpretability for LLMs: Unlocking the "black box" of large models to improve transparency, trust, and alignment with human values.

- Robust Distributed Training: Developing methods to ensure reliable model performance in scenarios with distributed data collection, even under adversarial conditions.

- Retrieval-Augmented Generation (RAGs): Leveraging external knowledge sources to enhance the factual accuracy and adaptability of generative AI systems.

- Causal Inference in AI: Applying advanced statistical methods to disentangle causal relationships, particularly in domains like sales prediction and promotion effectiveness.

- Diffusion Models in Computer Vision: Exploring the transformative potential of generative models for both creative and analytical tasks in visual data processing.

- Optimization of AI Resource Allocation: Designing efficient systems for allocating computation time and resources to large-scale AI models in multi-user environments.Beyond the technical realm, I draw inspiration from outdoor sports, where I embrace the challenge and unpredictability of nature. Whether surfing, trail running, or climbing, I find these pursuits invigorate my creativity and resilience.

Retrieval Augmented Generation using a french poem dataset

Retrieval-Augmented Generation (RAG) represents a fascinating intersection of deep learning, semantic search, and language generation. By combining the context-aware capabilities of large language models (LLMs) with document retrieval systems, RAG delivers grounded, accurate responses and significantly reduces hallucination. It’s a game-changer for applications that demand both precision and creativity.In this article, I’ll walk you through my journey of building and optimizing a RAG system tailored to an unconventional dataset—over 4,800 French sonnets spanning centuries of poetic expression. Together, we’ll explore the challenges, solutions, and technical insights that shaped this experiment.

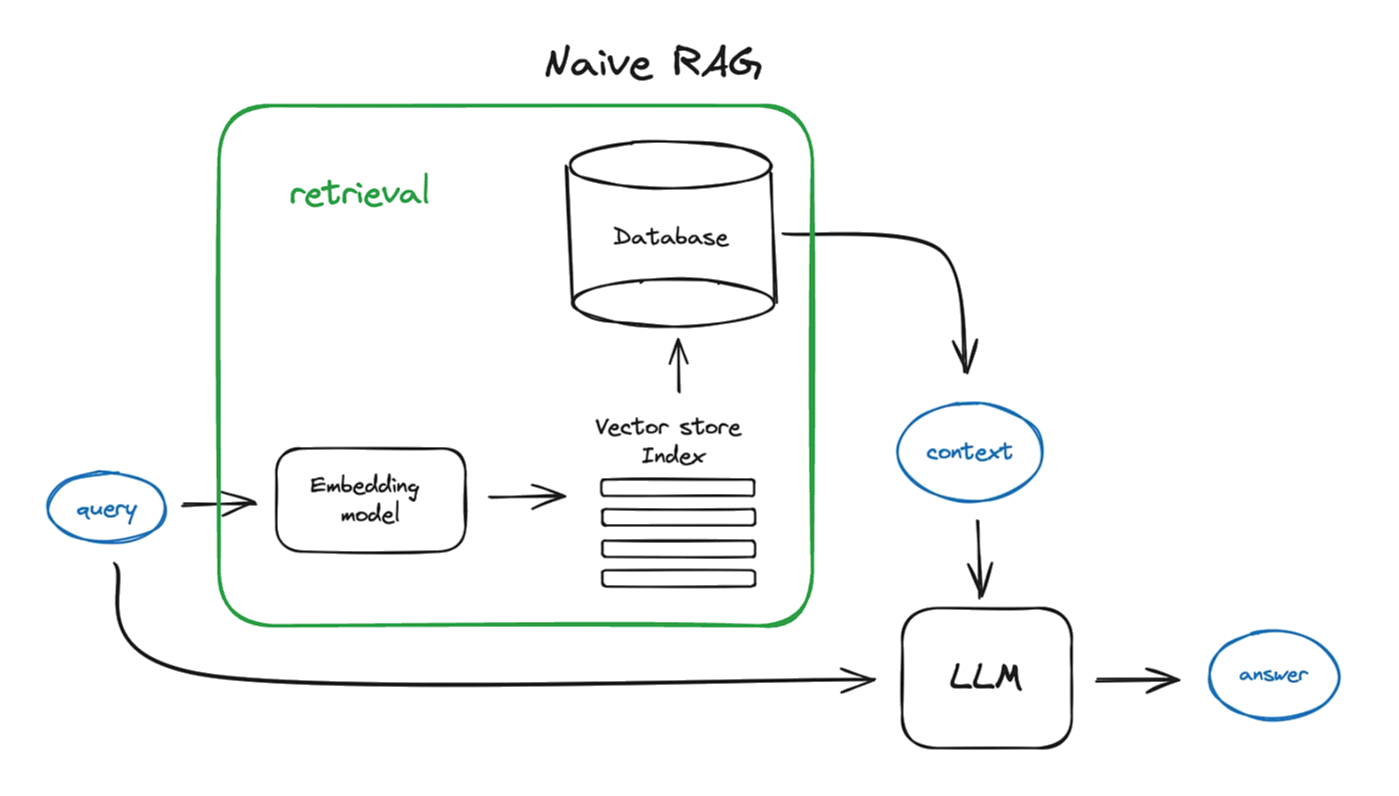

What Is RAG, and Why Is It Powerful?

At its core, RAG combines two components:Retriever: Finds relevant documents from a dataset based on the query.

Generator: Summarizes or answers questions using the retrieved documents.

The interplay of these components is a carefully orchestrated workflow, rather than an agentic system. This distinction matters because every step in RAG is predefined, with no dynamic decision-making involved. It’s a flowchart, not a free-willed agent.

The Dataset: Sonnets in XML Format

For this experiment, I worked with a large XML dataset of over 4,800 sonnets, primarily in French. My objectives were:

Thematic Retrieval: Retrieve sonnets based on thematic queries (e.g., love, seasons, sorrow).

Embedding Evaluation: Test the effectiveness of different embedding models for French texts, often containing archaic or poetic vocabulary.

Faithful Responses: Address retrieval inaccuracies and minimize hallucination in generated outputs.

Using LLamaIndex, I parsed the XML dataset to extract metadata (titles, authors, and dates) and sonnet text. Here’s the extraction process in action:

# Extracting sonnets from XML

from xml.etree import ElementTree as ET

from llama_index.core import Document# Parse XML and extract sonnets

sonnets = root.findall('.//tei:div[@type="sonnet"]', namespaces)

documents = [

Document(

text="

".join([line.text.strip() for line in sonnet.findall('.//tei:l', namespaces)]),

metadata={"title": sonnet.find('./tei:head', namespaces).text}

)

for sonnet in sonnets

]

print(f"Loaded {len(documents)} sonnets.")

By the end, 4,870 sonnets were neatly packed into LLamaIndex's Document format, ready for embedding and retrieval.

Building the Basic RAG System

Here’s how the entire process was stitched together:Embedding the Sonnets: Using HuggingFace’s embeddings, I created vector representations for each sonnet. LLamaIndex made this step seamless.Vector Storage: The embeddings were stored in a vector database (in this case, LLamaIndex's VectorStoreIndex) for efficient querying.Query Engine: I connected an OpenAI GPT-3.5 generator to the retriever to enable natural language answers from the retrieved documents.Note that two LLMs intervene art separate steps of the RAG workflow. The BAAI/bge-small-en embedding model was used to represent the semantic meaning of the documents while OpenAI's gpt3-turbo was queried with API access to process the retrieved documents and generate a final answer. The embedding model is specialized in encoding (encoder part of the transformer architecture) the meaning of our text pieces and it is used to create a vector representation of each one of the documents, whereas the generator model (decoder part of the transformer architecture) is only queried once per RAG query so we can afford a larger and more expensive model.

embedmodel = HuggingFaceEmbedding(model_name="BAAI/bge-small-en-v1.5")index = VectorStoreIndex(

documents,

embedmodel=embed_model,

showprogress=True

)index.storagecontext.persist(persistdir='./bge-smallsonnet_vectors/')llm_gpt = OpenAI(model='gpt-3.5-turbo', temperature=0.0)query_engine = index.as_query_engine(llm=llm_gpt)

This setup was sufficient for basic queries like identifying a sonnet’s author or finding poems about general topics (which could be done more efficiently with an SQL request), or finding a poem about a general topic.

. For example:

| User Query: | RAG Response: |

|---|---|

| "Find a sonnet about a frozen rive and give me the sentences that describe the river." | The theme of the sonnet 'Pâques-Fleuries' is the arrival of spring and the celebration of Easter, highlighting the awakening of nature... |

| "What is the theme of the sonnet 'Pâques-Fleuries'?" | The sonnet that describes a frozen river is "Quel froid !" by José-Maria de Heredia. The sentences that describe the river in the sonnet are: "Des larges défilés, des chemins ro... |

| "Find the author, title and content of a sonnet about computers " | Author: Not available Title: Not available Content: Not available |

In the third example, notice how the model didn't hallucinate an answer and instead was able to return an empty output for a query that it wasn't able to solve, a key strength of RAG.

Addressing Limitations of this Naive Implementation

While the initial RAG implementation was functional, it revealed several limitations that needed to be addressed to make the system more robust and user-friendly:1. Inconsistent Handling of Empty Results:In cases where no relevant sonnets were retrieved, the system lacked a consistent and user-friendly format to convey this outcome. For example, responses like "No results available" could have been expanded with additional context, such as explaining why no matches were found or suggesting alternative queries. This inconsistency could confuse users, especially in a domain like poetry, where nuanced matches are often expected.2. Propensity to Hallucinate:Although RAG generally avoids hallucination by relying on retrieved documents, the system occasionally inferred connections not grounded in the dataset. This happened when the generator attempted to "fill in the gaps" when retrieval results were sparse or lacked clear thematic matches. Such hallucinations undermined the reliability of the system, especially when users relied on it for scholarly or critical analysis of poetry.3. Simplistic Ranking of Retrieved Results:The initial implementation ranked retrieved documents solely based on cosine similarity scores from the embedding model. While this approach is straightforward, it sometimes prioritized less relevant sonnets that coincidentally shared surface-level semantic features with the query, leading to less meaningful results.

To address these issues, I focused on refining both the retrieval and generation components of the pipeline. This included introducing re-ranking strategies, custom prompts for handling empty results gracefully, and iterative testing to improve the quality and relevance of outputs.

Improved Querying

In any Retrieval-Augmented Generation (RAG) system, the querying process must be carefully tailored to the specific use case to ensure accuracy and relevance.

Balancing Depth and Precision

When working with a poetic dataset, such as a collection of sonnets, this involves fine-tuning the retrieval strategy to capture the right balance between context depth and precision. For example, increasing the number of retrieved nodes (similarity_top_k) ensures that nuanced or related poetic themes are not overlooked, but retrieving too many nodes risks introducing noise. Additionally, crafting a personalized prompt for the generative model is crucial. A well-designed prompt can guide the model to provide responses that respect the literary and stylistic elements of poetry, emphasizing structure, tone, and thematic relevance.Our final task requires to fetch exactly 2 documents that match a theme so having similarity_top_k larger than 2 is a form of reranking, where we voluntarily retrieve an excessive number of documents and later filter them down more carefully. Here the reranking is done directly by prompt engineering the generator to keep only 2 poems among the provided ones, but other methods include computing new embeddings for the query and the top_k documents using a larger and more semantically precise LLM, and then finally fetch the best cosine similarity matches in that new embedding space. That method saves compute time since expensive embeddings are only computed for a handful of documents/chunks at each query.

Re-ranking Retrieved Results:

Our final task requires to fetch exactly 2 documents that match a theme so having similarity_top_k larger than 2 is a form of reranking, where we voluntarily retrieve an excessive number of documents and later filter them down more carefully. Here the reranking is done directly by prompt engineering the generator to keep only 2 poems among the provided ones, but other methods include computing new embeddings for the query and the top_k documents using a larger and more semantically precise LLM, and then finally fetch the best cosine similarity matches in that new embedding space. That method saves compute time since expensive embeddings are only computed for a handful of documents/chunks at each query.

From Retrieval to Generation

Next I constructed a custom prompt to ask GPT3-turbo model to generate a littarary comparative analysis of the two most relevant poems found about the requested topic. This task requires a good retrieval system that fetches poems in line with the desired topic, and a good generator that produces an interesting analysis.

You are an expert of french poems who is trusted around the world. Always answer the query using the provided context information, and not prior knowledge. Context information is below.

---------------------

{contextstr}

---------------------

Given the context information and not prior knowledge please choose two poems that you think best match the theme from the query. Display both of them ENTIRELY. Then write a comparison of how they tackle a same theme differently.

Make sure that the comparison is focused on theme of {querystr} in both poems.

RAG response:

1. Title: Ivresses

Author: Jules Chopin

Date: 1895Ivresse du parfum des roses, des verveines,

Des violettes ou des chastes lilas blancs,

Ivresse de la chair aux effluves troublants,

Ivresse de l'alcool qui met le sang aux veines,

Vous êtes un doux philtre, et vos poisons latents

Sont comme une morphine à mon âme. Mes peines

Se calment sous l'effet de leurs ardeurs sereines,

Allégeant mon cerveau de ses spleens attristants.

Ivresse des douleurs et des spasmes ultimes,

Sur l'abîme infini vous bercez notre sort

Et brisez notre Vie en l'effroyable Mort.

Mais quand tu m'auras fait l'une de tes victimes,

O Mort ! en l'Au-Delà ton effort sera vain ;

Mon âme goûtera l'Ivresse du divin.2. Title: Le vin des amants

Author: Charles Baudelaire

Date: 1857Aujourd'hui l'espace est splendide !

Sans mors, sans éperons, sans bride,

Partons à cheval sur le vin

Pour un ciel féerique et divin !

Comme deux anges que torture

Une implacable calenture,

Dans le bleu cristal du matin

Suivons le mirage lointain !

Mollement balancés sur l'aile

Du tourbillon intelligent,

Dans un délire parallèle,

Ma sœur, côte à côte nageant,

Nous fuirons sans repos ni trêves

Vers le paradis de mes rêves !Comparison:

While both poems touch on the theme of alcohol and its effects, they approach it from different perspectives. "Ivresses" by Jules Chopin portrays alcohol as a soothing elixir that helps alleviate pain and sorrow, comparing it to morphine for the soul. The poem acknowledges the addictive nature of alcohol but also hints at the ultimate escape it provides from the struggles of life.On the other hand, "Le vin des amants" by Charles Baudelaire presents alcohol as a means of transcending reality and entering a fantastical realm. The poem describes a journey on the intoxicating effects of wine, where the protagonists escape into a paradise of dreams and desires. Baudelaire's poem romanticizes the allure of alcohol and the temporary relief it offers from the constraints of everyday life.

How good is Chat GPT for that same task?

When prompted with the same query, gpt-4o gives what seems like a satisfactory answer except for 3 key weaknesses:

The two poems retreived by GPT are "The Ragpickers" Wine by Charles Baudelaire and "The Wine's Lament" by Paul Verlaine. The former is a real poem and its topic is perfectly relevant for the given query. However, the latter is an hallucination since no poem with this title was written by Verlaine

GPT cannot retrieve the full text of the poems it chose, and when prompted to do so in a subsequent query, it hallucinates low quality poems (eg. "Listen to me, I am the wine...").

ChatGPT is clearly unadapted for this task, but notice that we still leveraged its reasoning capabilities in the last step of our workflow when prompting gpt3-turbo to produce a litterary analysis through the OpenAI API. In other words, RAG allows us to keep the best of both words, sticking to the reliability of a deterministic workflow to retrieve appropriate documents, while levraging the stochastic and creative nature of LLMs during generation.

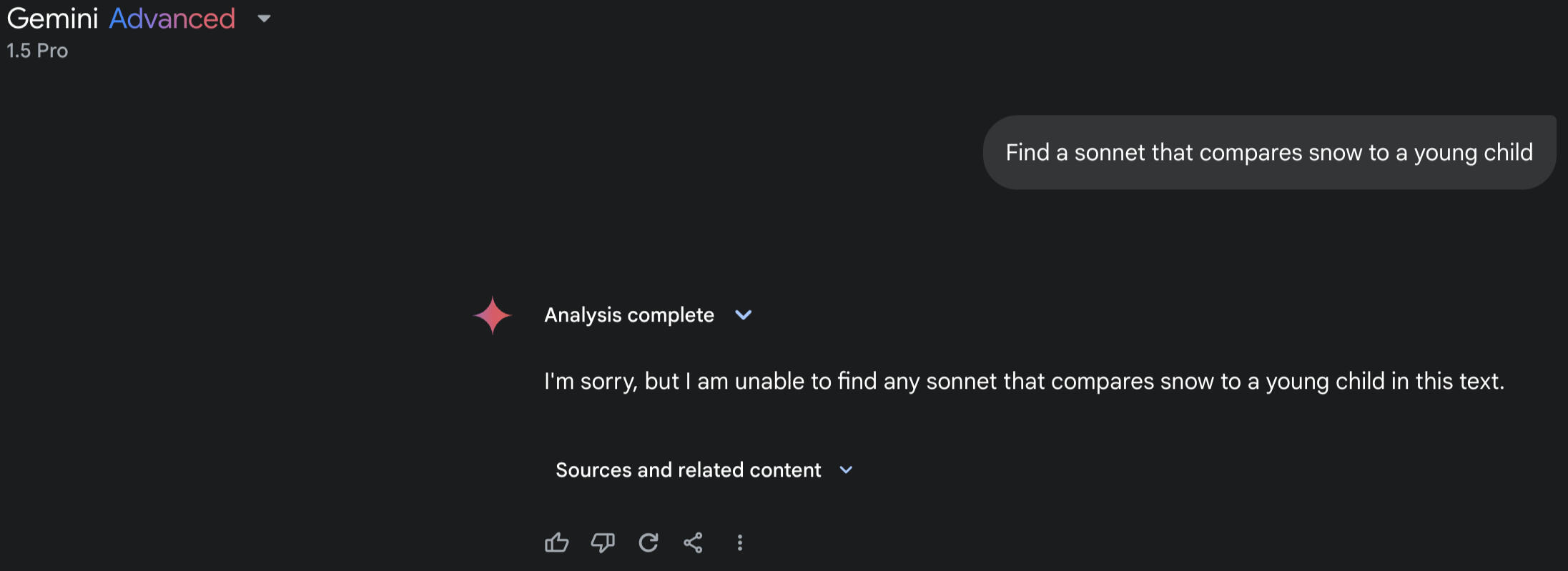

Is RAG really needed here?

Modern chatbots have become very competitive in the field of information retrieval, with newer versions of gpt that create less hallucinations thanks to methods like RLHF and Instruction Tuning that happen after the model's pre-training on raw data.Google's Gemini 1.5 pro is available online and offers the user a massive context window of 1 million tokens.

Our toy poem dataset is quite small and fits contains 1,065,254 tokens (as computed with the base-bert-uncased tokenizer) so we could practically fit the entirety of the dataset in a Gemini prompt and let the LLM fetch the proper information with its attention mechanism instead of building a RAG pipeline.I tried this method with a Pro access to Google's Gemini 1.5 model and it successfully answered simple queries such as finding the author of a specific sonnet, but failed on more semantically involved tasks, as shown in the screenshot below. What's more, it systematically took the LLM more than 30 seconds to process the extremely long prompt it was given, resulting in an extremely inefficient per-question cost compared to our RAG approach that only queries OpenAI's API once, with a much much shorter prompt (less than 1,000 tokens vs. 1 million)

This experiment teaches us that querying a LLM with a very large context in the prompt suffers from two major drawbacks:1. It requires much more compute resources to produce an answer since attention needs to be computed between each of the tokens in the context

2. Performance may be disminished compared to a RAG workflow because of phenomena called lost-in-the-middle where the beginning and end of the prompt tend to have more weight in the model's output, and documents in the middle can be overlooked.

Final Thoughts

This project applied RAG to a dataset of poems, trying to retrieve poems that match a specific theme. The accuracy of the retrieval is not obvious to compute in such an example since multiple good matches may be found. However, the experiments above demonstrated how even a simple retrieval system solves many of the hallucination and context length issues that simple LLM chatbots face.RAG is a trendy topic which recieves great attention from researchers and each week, interesting engineering tricks to make the retrieval faster or more accurate are developped.This project featured an end-to-end RAG pipeline, from data formatting, to indexing, retrieval and generation. Some topics that were left out include

Chunking considerations. Since our documents are poems that all fit in the context length. In some other application, one might need to chunk a large file into subparts, and to include relevant context about the main file in each subpoart.

Summarization. We are able to concatenate 5 or more documents to the prompt of the generation model without the need for compressing techniques.

RAG evaluation. Our evaluations were manual and qualitative in nature. We also compared the output from a RAG from that of a chatbot such as GPT or Gemini. When developping a RAG system for a large scale project where retrieving the correct document is crucial, evaluation techniques such as LLM-assisted question generation can be used.

What’s next? I’m diving deeper into re-ranking algorithms to better control how retrieved documents influence generation. Stay tuned for more experiments, probably about RAG evaluation, and let me know—how are you using RAG in your work?Happy experimenting! 🌟

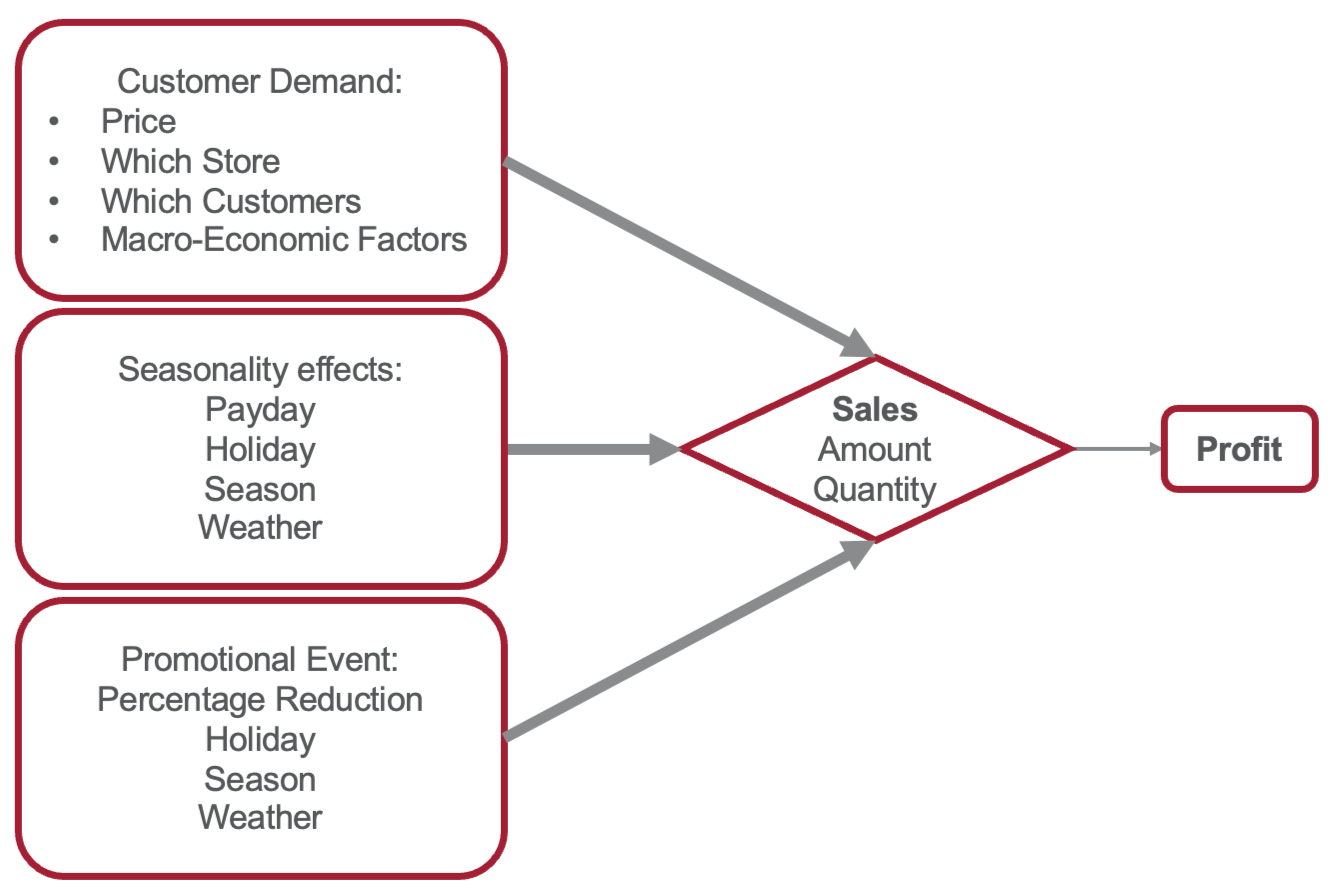

Unlocking Promotional Insights with Causal Inference: A Deep Dive into Retail Sales Analytics

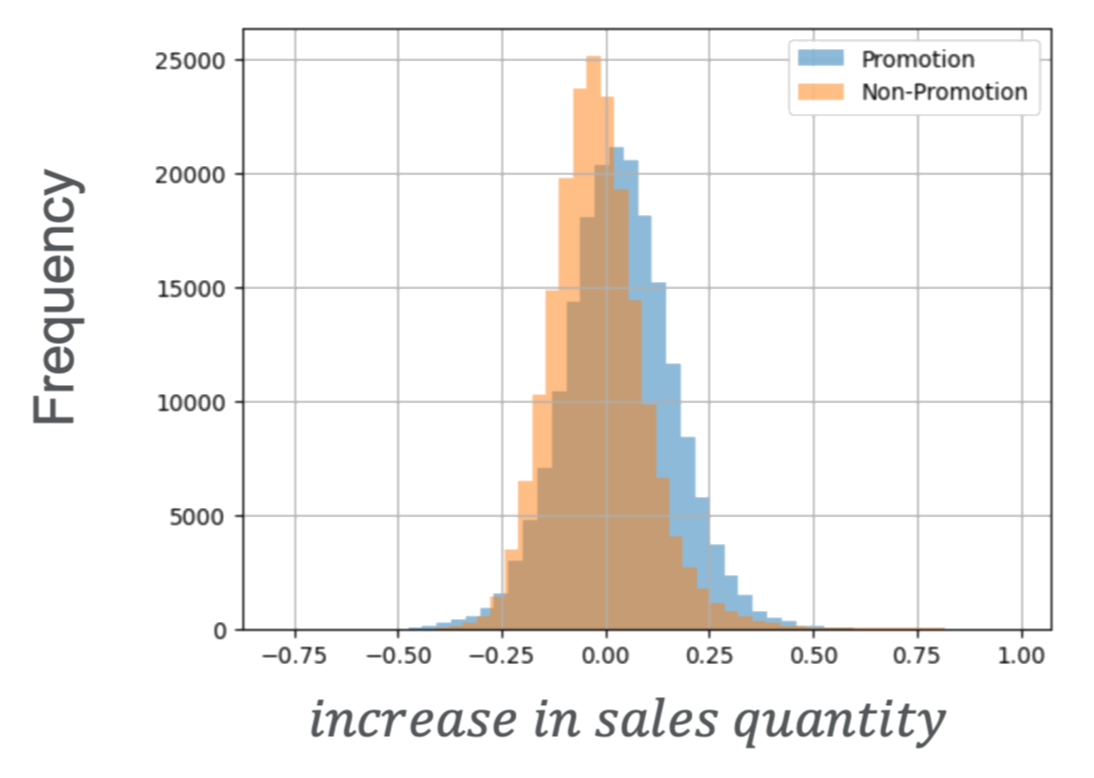

Promotions are everywhere—from the laundry detergent aisle to your favorite online store. But how do we truly understand their impact on sales? This is not just a matter of counting sales spikes; it’s about isolating the causal effect of promotions on consumer behavior. In this post, I’ll walk you through how we approached this problem in a retail context using causal inference tools, with a focus on how we structured our data, tackled seasonality, and used advanced frameworks to match samples across years.

Structuring Data for Insightful Analysis

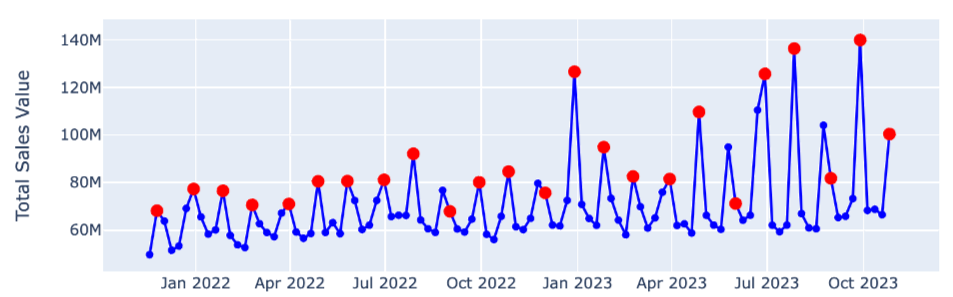

The starting point of our analysis was large-scale transactional data from a retail chain. This data included over two years of detailed records spanning thousands of products across hundreds of stores. To make this complex dataset manageable, we aggregated the data at the week-product-store level. Why weekly? Weekly aggregation strikes a balance: it captures meaningful sales trends while smoothing out daily noise, such as random fluctuations or outliers.Each entry in our dataset indicates whether a promotion was active for a specific product in a given store during a particular week. This setup allowed us to easily compare sales trends between promotional and non-promotional weeks, laying the foundation for causal analysis.

Tackling Seasonality: A First Attempt

Retail data often dances to the rhythm of seasons, holidays, and paydays. To avoid conflating these patterns with promotional effects, we needed to correct for seasonality. Our initial approach was straightforward: compute aggregate weekly sales trends across all products and stores. By normalizing individual sales against these global trends, we created a baseline that approximates what sales might have looked like without promotions.While this approach provided some insights, it had limitations. For example, it assumed all products were affected by seasonality in the same way, which isn’t always true. That’s where causal inference frameworks became essential.

In the above figure represents the aggregate sale values over all products. Considering all products at once corrects for noise in single product sales and gives us an overview of the seasonality patterns for the store.In our example, the most notable seasonal effect is a monthly recurrence where sales are higher during payday weeks (marked with the red dots).

Why Causal Inference Matters

Traditional predictive models can tell us what might happen under certain conditions, but they often fall short of explaining why it happens. Causal inference bridges this gap, providing tools to build counterfactual scenarios and estimate the direct effect of interventions, such as promotions.Imagine that promotional events are not evenly distributed throughout time and that they are often launched during payday week. Then seaonality becomes a confusing factor since we can't directly be sure that the increase in sales we observe on those weeks is a consequence of promotions occurring.By framing our problem as a Contextual Average Treatment Effect (CATE) estimation, we explicitly modeled the causal relationship between promotions and sales. This allowed us to move beyond correlations and provide actionable insights that managers can trust.

Moving Toward Contextualized Insights

The richness of causal inference lies in its ability to go beyond averages and uncover nuanced patterns. For instance, we found that some products performed better under specific promotional mechanisms, such as percentage discounts versus bundle offers. To capture these nuances, we incorporated contextual features into our models, such as store type, regional demographics, and even weather patterns during the promotional period.This approach also enabled us to estimate the heterogeneous treatment effects (HTE) of promotions, identifying subgroups of products or stores that were particularly responsive. These insights can guide more targeted promotional strategies, maximizing impact where it matters most.

Lessons Learned and Next Steps

Data structure is key: Aggregating at the right level (weekly in our case), choosing the relevant features, enriching the dataset with product and store information simplifies analysis and ensures meaningful comparisons.

Seasonality is complex : Global baselines are helpful to get a sense of the trends in the dataset but insufficient to achieve good predictions; causal frameworks that match samples across years provide a more accurate picture.

Heterogeneity matters: Not all promotions are created equal. They differ in their scope, duration and scale. We adapted a binary treatment effect method to fit our heterogeneous promotions.

My next steps include exploring propensity score methods and inverse probability weighting (IPTW) to further refine the model. I also aim to incorporate dynamic decision-making frameworks to optimize promotions in real-time.

Predicting Startup Success: A Data-Driven Approach to Venture Capital Decisions

Startups are inherently risky ventures, with only a small fraction achieving the kind of success that attracts significant returns for investors. This begs the question: can we predict which startups will succeed using data? By analyzing a startup's funding history, growth trajectory, and descriptive attributes, can we identify key patterns that signal future success? These questions lie at the heart of our work, which combines advanced machine learning techniques with robust optimization frameworks to tackle two interconnected problems:

1. Predicting startup success (both binary and continuous).

2. Optimizing investment strategies based on these predictions.In this post, we’ll focus on the first part of our work: defining and predicting startup success. A follow-up post will dive into the prescriptive phase, where we design and evaluate portfolio strategies based on these predictions.

The Challenge: Defining Startup Success

Success is a slippery concept when it comes to startups. The literature typically defines it in binary terms, where success is indicated by:

- An acquisition,

- An Initial Public Offering (IPO), or

- A significant funding round (e.g., Series C or later).While this approach is useful for classification, it misses the nuances of varying levels of success. For example, two startups might achieve acquisitions, but the valuation growth of one might far outstrip the other.To address this, we adopt a hybrid approach:

1. Binary Success: Whether the startup achieves a major milestone (acquisition, IPO, or Series C) within five years of its Series B round.

2. Continuous Success: Valuation growth multiple over the five years following Series B. This provides a measure of how much a startup grows in financial terms.

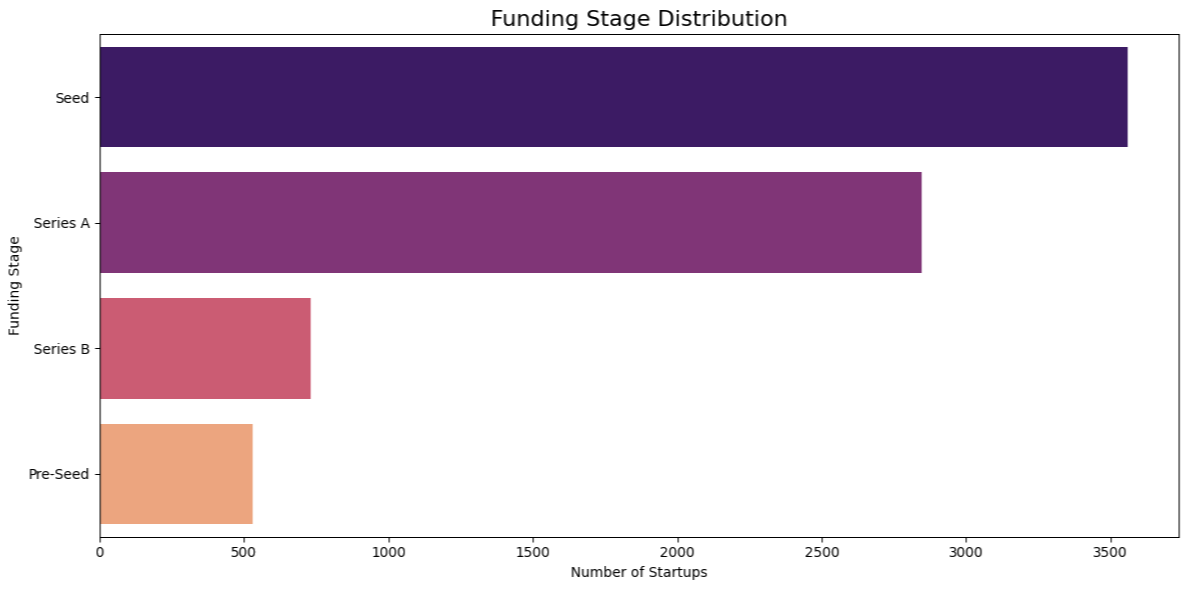

Why Focus on Series B Startups?

Startups that reach Series B funding represent a critical stage in their lifecycle. They have moved beyond the high-risk seed and Series A rounds but still have substantial growth potential. By focusing on these mid-maturity startups, we ensure:

- Sufficient historical data (seed and Series A rounds) to inform predictions.

- A meaningful five-year evaluation window for success.

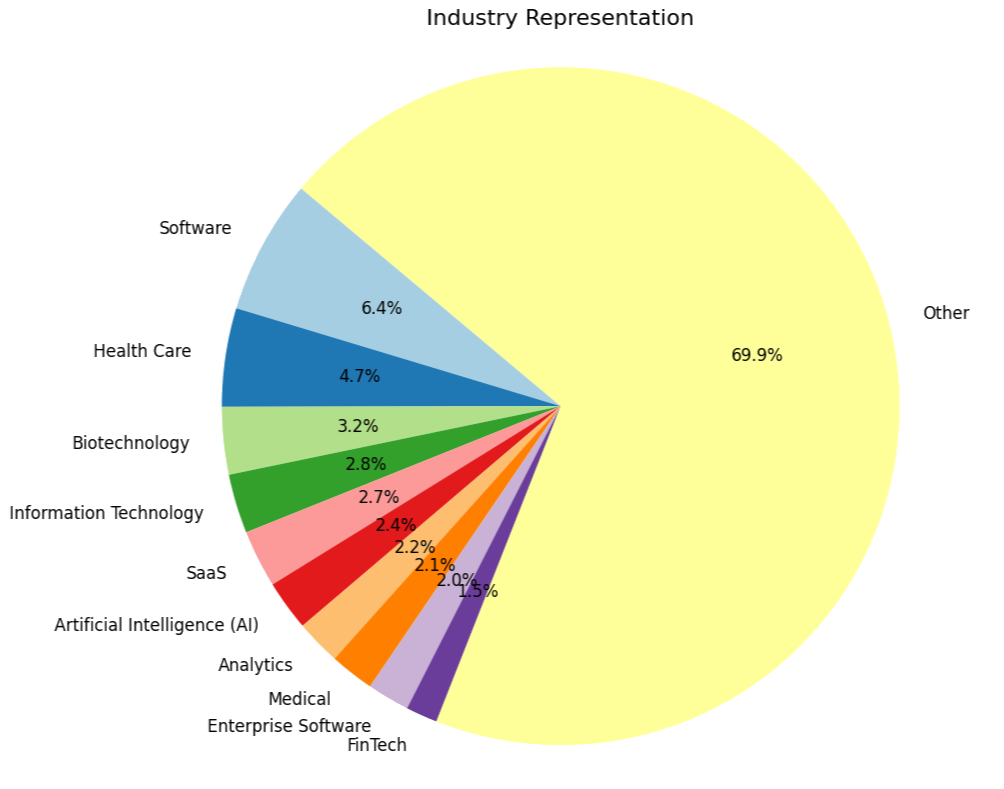

Data Collection

We collected data for over 10,000 U.S.-based startups founded between 2008 and 2019 using the Crunchbase API. To ensure comprehensive coverage, we manually scraped financial data, capturing features such as:

- Funding amounts and rounds,

- Founder counts,

- Industry group (e.g., AI, biotechnology),

- Headquarters region, and

- Descriptions of the startups.Limiting the dataset to startups founded before 2019 ensures at least five years of post-Series B data, which is crucial for defining and evaluating success.

Feature Engineering

We categorized the features into three broad groups to ensure a structured approach:

1. Temporal Features: Timing of key milestones, such as:

- Days between founding and the first funding round,

- Days between founding and Series A or Series B, and

- Days between successive funding rounds.

2. Funding Features: Metrics capturing funding history, such as:

- Total funding raised before Series B,

- Number of funding rounds, and

- Money raised during Series A.

3. Descriptive Features: Categorical and unstructured attributes, such as:

- Industry group,

- Headquarters region, and

- Startup descriptions (used for textual embeddings).This feature engineering ensures we capture the diverse trajectories startups take to reach Series B.

| Temporal Features |

|---|

| Days Between Founding and Earliest Funding Round |

| Days Between Founding and First Seed/Pre-Seed |

| Days Between Founding and Last Seed/Pre-Seed |

| Days Between Founding and Series A |

| Days Between Founding and Series B |

| Funding-Related Features |

|---|

| Earliest Funding Round – Type |

| Earliest Funding Round – Money Raised (in USD) |

| Series A Money Raised (in USD) |

| Total Money Raised Before Series B |

| Total Funding Rounds Before Series B |

| Number of Seed/Pre-Seed Rounds |

| Company Descriptive Features |

|---|

| Number of Founders |

| Industry Group (e.g., AI, Biotechnology) |

| Headquarters Region (e.g., Bay Area, Greater Boston Area) |

| Textual Description of Startup (used for embeddings) |

Predictive Models: What Worked and What Didn’t

To predict both binary and continuous success, we experimented with a range of machine learning models, focusing on both performance and interpretability.

Traditional Models

We started with models like:

- CART (Classification and Regression Trees),

- Random Forest,

- XGBoost,

- LightGBM, and

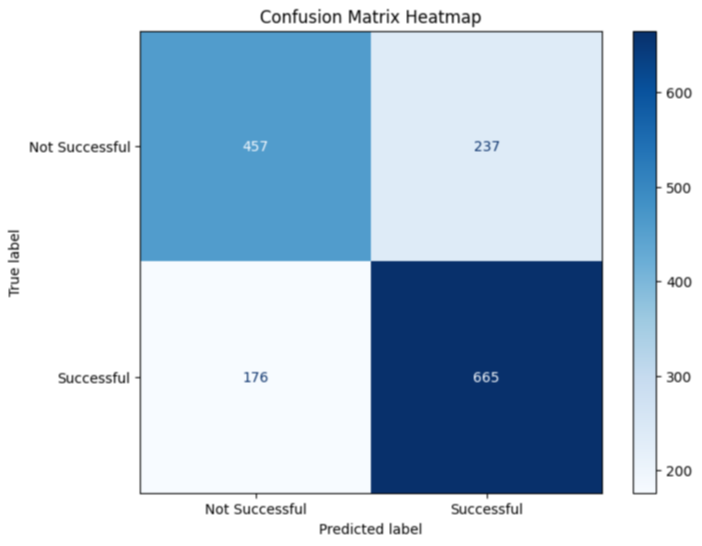

- CatBoost.These models performed well on numeric and categorical data. Among them, CatBoost emerged as the top performer for binary classification, achieving an AUC of 0.80. Its ability to handle categorical features natively contributed to its strong performance.

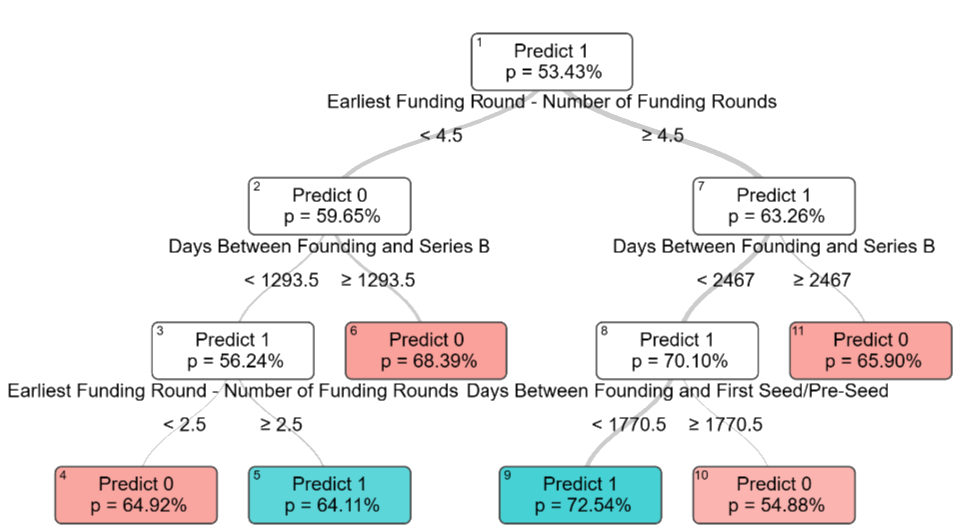

Optimal Trees

To balance performance with interpretability, we also used:

- Optimal Classification Trees (OCT) for binary success, and

- Optimal Regression Trees (ORT) for continuous success.These interpretable models revealed key insights into success predictors. For instance:

- The speed of early fundraising (e.g., days between founding and first seed round) emerged as a critical factor.

- The number of funding rounds before Series B was another strong predictor.While OCT and ORT underperformed slightly compared to boosting models, their interpretability made them valuable tools for understanding the underlying data.

Multi-Modal Techniques

We incorporated unstructured textual data (startup descriptions) using:

- BERT embeddings,

- Llama embeddings, and

- TabText, a method for transforming tabular data into textual representations.These embeddings were combined with structured data to create hybrid models. However, contrary to expectations, these multi-modal techniques did not outperform simpler models. The likely reason? Noise in the textual data and the relatively small dataset size for effective transfer learning.

| Model | Num. | Num. + Cat. | Num. + Cat.+ TabText | Num. + Cat.+ BERT | Num. + Cat.+ LLama | Num. + Cat.+ Transfer |

|---|---|---|---|---|---|---|

| CART | 0.61 | 0.66 | 0.61 | 0.61 | 0.61 | 0.63 |

| Random Forest | 0.75 | 0.78 | 0.73 | 0.72 | 0.72 | 0.76 |

| XGBoost | 0.75 | 0.79 | 0.77 | 0.75 | 0.75 | 0.77 |

| LightGBM | 0.76 | 0.79 | 0.78 | 0.77 | 0.76 | 0.79 |

| CatBoost | 0.78 | 0.80 | 0.79 | 0.78 | 0.77 | 0.80 |

| OCT | - | 0.70 | - | - | - | - |

Key Results and Insights

Our key takeaways from the predictive phase include:

1. Simplicity Often Wins: For this dataset, numeric and categorical features were sufficient for high performance. Advanced techniques like embeddings added noise rather than value.

2. Rapid Early Growth Predicts Success: Features like the time between founding and the first funding round are critical indicators of future outcomes.

3. Interpretable Models Add Value: While boosting models (e.g., CatBoost) offered the best performance, Optimal Trees provided actionable insights into the factors driving success.

What’s Next?

While predictions are a critical first step, the ultimate goal is to turn these predictions into actionable investment strategies. In our next post, we’ll explore:

- Portfolio optimization: Balancing risk and return using predictions.

- Robust investment strategies: Accounting for uncertainty in predictions to avoid over-concentrated portfolios.

- Prescriptive insights: Translating predictive analytics into real-world decision-making.By combining data-driven predictions with robust optimization techniques, we aim to provide a comprehensive framework for venture capital decision-making.Stay tuned for the next chapter in this journey!

Optimizing Compute Allocation for Large Language Models: A Data-Driven Approach

Large Language Models (LLMs) are the backbone of modern AI applications, powering everything from chatbots to advanced problem solvers. But deploying these models at scale comes with a critical challenge: how should compute resources be allocated between training and inference? The tradeoff is far from trivial—too much training and the model risks underperforming in real-world applications; too little and its foundational accuracy suffers.This blog post dives into the methods and insights behind my project, where we developed a robust framework to balance these competing demands using stochastic optimization. Along the way, we explore how uncertainty in user demand complicates this balance and how scenario modeling can help.

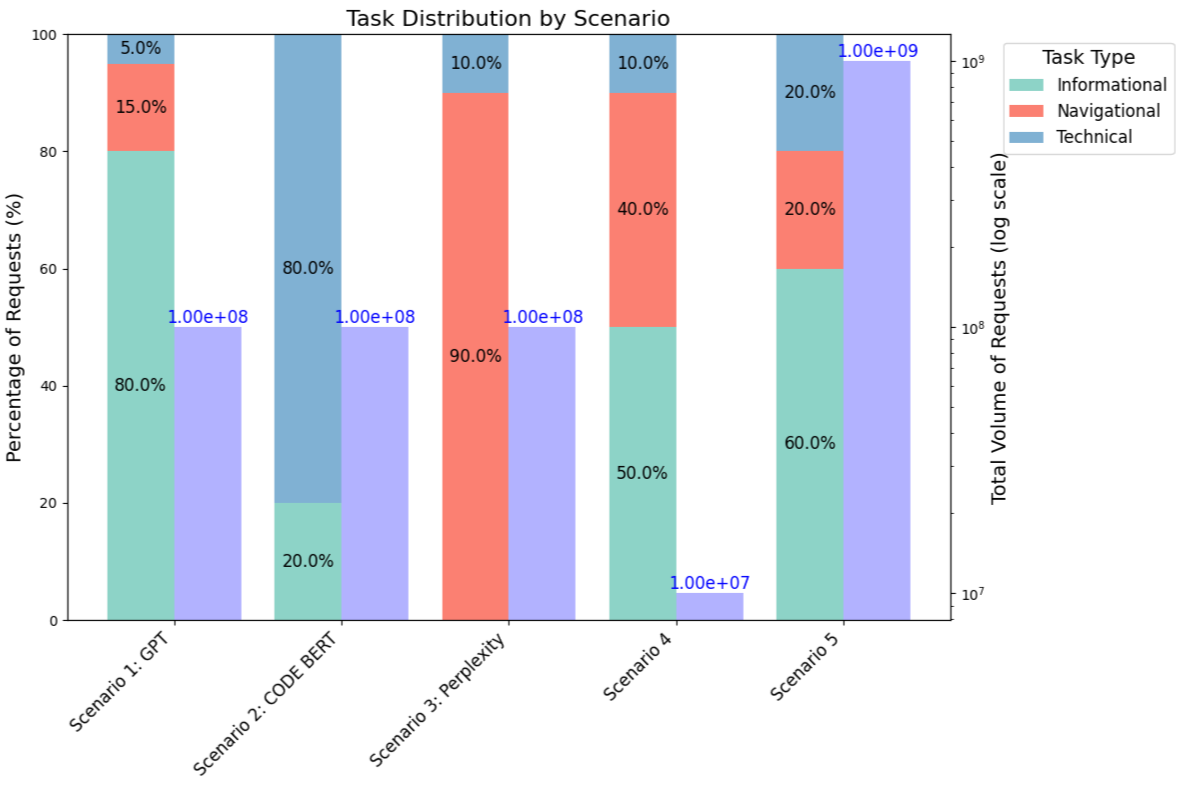

The Training-Inference Tradeoff: A Modern Challenge

Recent research has highlighted that inference-time compute can sometimes be a more effective lever for improving LLM performance than additional training. Techniques like chain-of-thought prompting and repeated sampling exploit inference-time resources to deliver higher accuracy, especially on complex tasks. This creates a tradeoff for companies providing LLM services: should they invest heavily in training upfront or reserve resources for enhancing inference quality?The stakes are high, especially in real-world deployments where user tasks vary in complexity and importance. To address this, we classified user tasks into three categories:1. Informational Tasks: General knowledge retrieval, where minor inaccuracies are tolerable.

2. Navigational Tasks: Finding or directing users to specific content, requiring higher precision.

3. Transactional Tasks: Complex operations like coding or problem-solving, which demand the highest accuracy.Each task type has unique accuracy requirements, further complicating resource allocation.

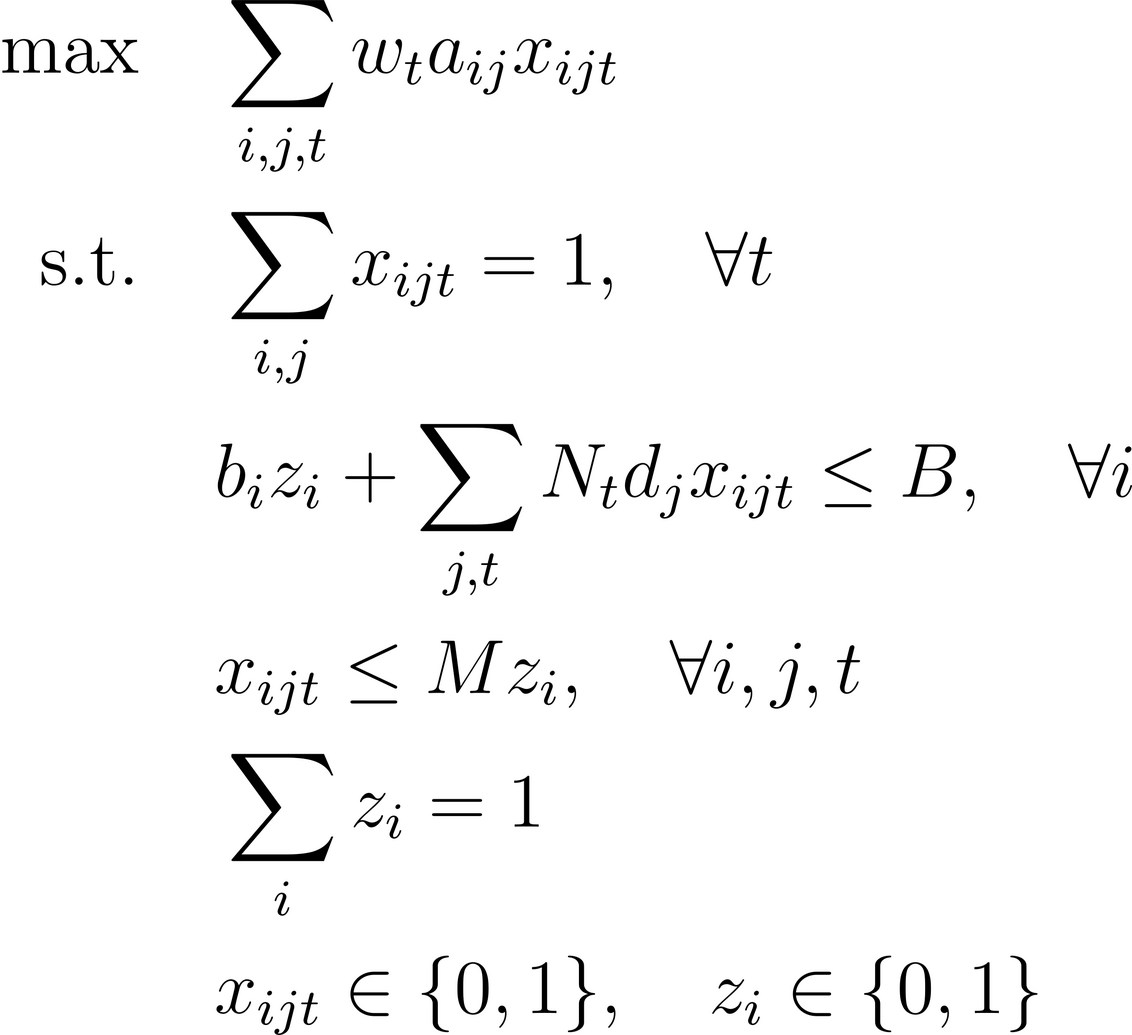

Mathematical Formulation: Allocating Compute Resources Optimally

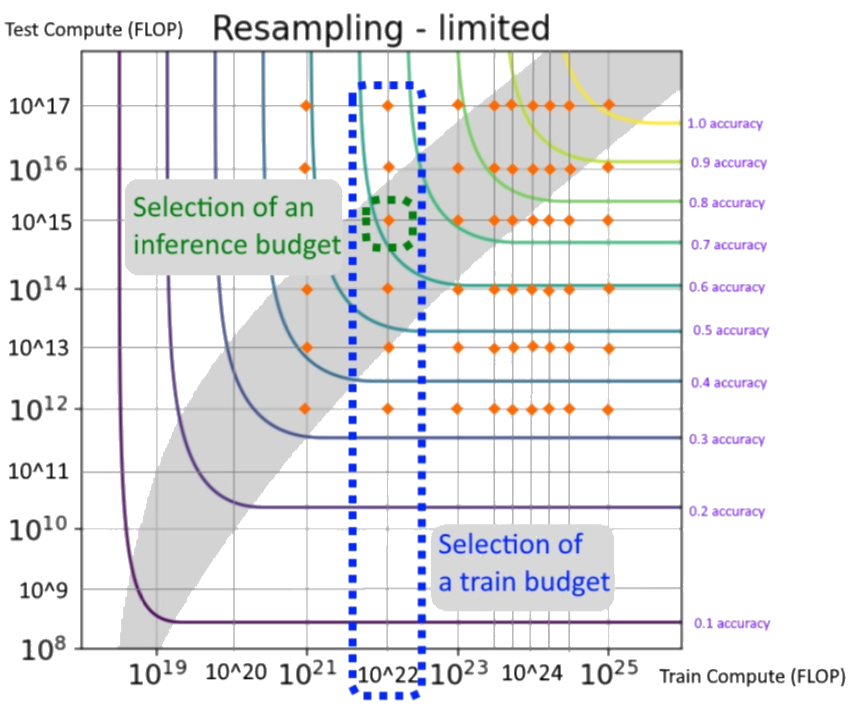

To balance training and inference resources effectively, we modeled the problem as an optimization framework. The goal is to maximize overall model accuracy by deciding how much compute to allocate to training and inference, while adhering to constraints such as the total compute budget.

Key Features of the Model:1. Non-Linear Accuracy Handling: The relationship between accuracy and compute allocation is non-linear. To address this, we sampled accuracy values over a grid of training and inference budget combinations, creating a piecewise-linear approximation. This allows the model to represent complex accuracy dynamics effectively.2. Objective Function: The equation's objective is to maximize accuracy across all tasks by selecting the best combination of training and inference budgets. Each task type is weighted based on its importance, ensuring critical tasks (e.g., transactional queries) receive higher priority.3. Decision Variables: The binary variables in the model dictate which combinations of training and inference budgets are selected. These variables ensure a single training budget is chosen, maintaining consistency across task types.4. Constraints: The first constraint ensures only one combination of training and inference budgets is selected per task type. The second enforces that the total compute used does not exceed the overall budget.

Additional constraints link training and inference choices and restrict the solution to one training budget.

Modeling Uncertainty with Scenarios

The biggest unknown in this tradeoff is N—the total number and distribution of tasks the model will face post-deployment. To tackle this, we created five scenarios representing different task distributions, ranging from knowledge-heavy workloads to high-volume, diverse use cases. By incorporating these scenarios into our optimization framework, we accounted for the inherent uncertainty in user behavior.

Methodology: Optimizing Across Scenarios

Our framework uses a Mixed-Integer Optimization (MIO) model with decision variables representing the allocation of compute resources between training and inference. Key components of the approach include:- Defining Constraints: Ensuring the total compute budget is respected.

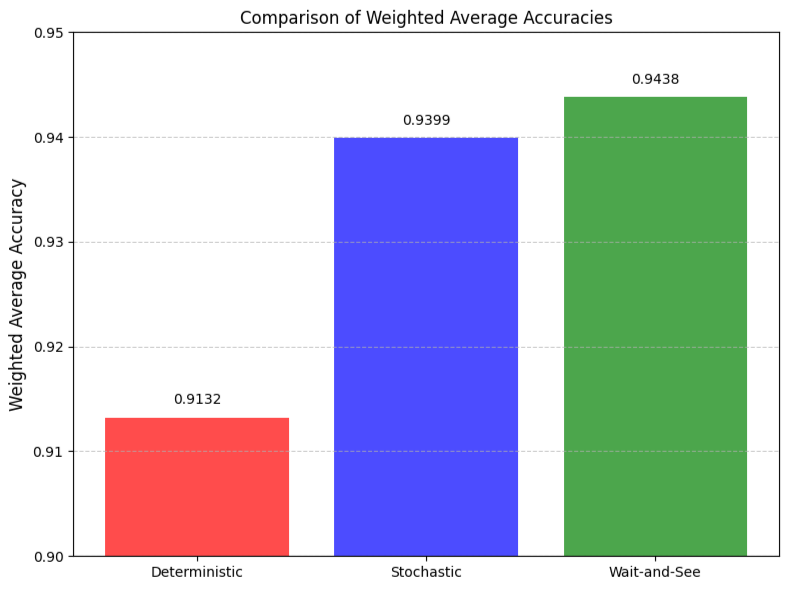

- Scenario-Based Optimization: Comparing three strategies for handling uncertainty:

1. Deterministic Optimization: Allocates resources based on average task loads across scenarios. Simple but inflexible.

2. Stochastic Optimization: Balances training and inference by optimizing for all scenarios simultaneously. This provides robustness to variability.

3. Wait-and-See Optimization: Assumes perfect foresight to maximize accuracy per scenario. While optimal, this is unrealistic in practice.By integrating iso-accuracy curves derived from scaling laws, we captured the non-linear relationship between training and inference budgets and their impact on model accuracy.

1. Deterministic Optimization: Simplicity with Limitations

Deterministic optimization involves allocating resources based on the average task load across all scenarios. While computationally straightforward, this approach is fundamentally limited by its inability to adapt to variability in task demands.Key Characteristics:

Fixed Training Budget: The training budget is determined based on the mean task distribution, ignoring potential variability.

Rigid Inference Allocation: This rigidity leads to inefficiencies, particularly in scenarios where task demands deviate significantly from the average.

2. Stochastic Optimization: Robustness Through Adaptability

Stochastic optimization is designed to account for uncertainty by jointly optimizing training and inference budgets across all possible scenarios. This method maximizes the expected accuracy by balancing trade-offs between accuracy and computational constraints.Key Characteristics:

Scenario-Based Flexibility: By considering multiple scenarios simultaneously, this framework ensures robustness to variability in task distributions.

Balanced Resource Allocation: Training budgets and inference resources are distributed to achieve the best average performance across all scenarios.

3. Wait-and-See Optimization: Theoretical Upper Bound

The wait-and-see framework assumes perfect foresight, enabling it to tailor resource allocation to each scenario. While this results in the highest possible accuracy for every scenario, its reliance on perfect information renders it impractical for real-world use.Key Characteristics:

Dynamic Adjustments: Training and inference budgets are adjusted individually for each scenario, ignoring the constraints of decision-making under uncertainty.

Impractical Assumptions: This method assumes that future task distributions are fully known before deployment, which is rarely feasible.

Key Takeaways

1. Stochastic Optimization Strikes the Best Balance: It achieved near-optimal accuracy (93.2%) while adapting effectively to different scenarios. This makes it the most practical choice for real-world deployments.

2. Deterministic Methods Underperform: Averages fail to capture the variability in task demands, leading to rigid and inefficient budget allocations.

3. Perfect Information Adds Marginal Value: The difference between stochastic and wait-and-see optimization (EVPI: 0.4%) shows that accounting for uncertainty robustly is almost as good as perfect foresight.

| Metric | Deterministic | Stochastic | Wait-and-See |

|---|---|---|---|

| Average Objective | 0.9132 | 0.9399 | 0.9438 |

| Train Budget (%) | 25.0% | 50.0% | 75.0% (Scenarios 1–4); 50.0% (Scenario 5) |

| Total Budget Used (%) (Scenario 1) | 53.0 | 78.0 | 95.8 |

| Total Budget Used (%) (Scenario 2) | 53.0 | 78.0 | 85.0 |

| Total Budget Used (%) (Scenario 3) | 44.0 | 69.0 | 94.0 |

| Total Budget Used (%) (Scenario 4) | 35.0 | 60.0 | 85.0 |

| Total Budget Used (%) (Scenario 5) | 71.0 | 96.0 | 96.0 |

Final Thoughts

This project highlights the value of stochastic optimization in managing the complexities of compute allocation for LLMs. It also underscores how scenario modeling can provide actionable insights despite the uncertainty of user demand. Future work could refine this approach by incorporating real-world deployment data or exploring multi-objective optimization that balances accuracy with response time.By addressing these challenges, we can ensure LLMs deliver consistent, high-quality performance—no matter the task.

From Prediction to Prescription: Designing Robust Startup Investment Strategies

In our previous post, we explored how machine learning can predict startup success using a hybrid definition of binary and continuous outcomes. We demonstrated that models like CatBoost, alongside feature engineering, could identify patterns in funding history and company attributes to forecast success with significant accuracy. However, making predictions is only part of the story. The ultimate challenge for venture capitalists lies in answering a critical question:

How do we translate these predictions into actionable and robust investment strategies?

In this post, we take the next step: using the predictions of startup success to design optimal portfolios that balance risk and reward. By incorporating robust optimization techniques, we go beyond simple prediction-based methods to develop investment strategies that account for uncertainty and ensure diversification.

The Challenge: Turning Predictions into Actions

The default approach to portfolio optimization is straightforward: invest in startups with the highest predicted returns. While intuitive, this point prediction strategy is inherently risky. Predictions, no matter how accurate, are subject to uncertainty, and overconfidence in any single investment can lead to disastrous outcomes.To mitigate these risks, we explore two alternative approaches:

1. Robust Optimization: Incorporates uncertainty into predictions to ensure diversification and guard against worst-case scenarios.

2. Weighted Average Prescriptions: Combines insights from both binary and continuous success predictions to make more balanced investment decisions.These approaches aim to provide stable, risk-adjusted returns, even when faced with noisy or imperfect predictions.

Our Methodology

Step 1: Using Predictions from Part 1

The predictions from our earlier work form the foundation of this phase. Specifically:

- Binary success probabilities (e.g., likelihood of IPO/acquisition) were obtained from models like CatBoost and Optimal Classification Trees (OCT).

- Continuous success estimates (e.g., valuation growth multiples) were generated using Optimal Regression Trees (ORT).These predictions served as inputs for our prescriptive optimization models.

Step 2: Portfolio Optimization Frameworks

We designed and compared three portfolio optimization strategies:

1. Point Prediction

The simplest approach uses the predicted valuation growth (continuous success) to select startups with the highest expected returns. Formally, this involves maximizing the sum of predicted returns under a budget constraint:

Where:

- ri is the predicted valuation growth for startup i,

- zi is the allocation to startup i, and

- B is the total investment budget.While straightforward, this method assumes perfect confidence in the predictions and often results in overly concentrated portfolios.

2. Robust Point Prediction

To address the risks of overconfidence, we introduced a robust optimization framework. This approach accounts for prediction uncertainty by allowing up to Gamma % of success labels to be adversarially flipped. This forces the optimization to diversify investments across startups, reducing reliance on any single prediction.The robust formulation is as follows:

Where Delta y_i represents adversarial changes to the predicted success labels, constrained by an uncertainty budget Gamma.This method penalizes concentrated portfolios and ensures that even in a worst-case scenario, the returns remain stable.

3. Weighted Average Prescriptions

This approach leverages both binary and continuous predictions by combining them into a weighted average. Startups are grouped into "prediction neighborhoods" defined by the leaves of the Optimal Classification and Regression Trees (OCT and ORT). The optimization problem is then written as:

Where:

- L1(i) and L2(i) represent the classification and regression tree predictions for startup i,

- yu is the binary success probability for a training sample u, and

- ru is the continuous success prediction for u.This approach balances the trade-off between binary likelihood and continuous returns, offering a middle ground between the other two methods.

Step 3: Evaluation

To evaluate the performance of each strategy, we simulated investments over the five years following Series B funding. Key metrics included:

1. Median Return: Reflects the typical performance across portfolios.

2. Risk-Adjusted Return: Measures the variability of returns, calculated as the mean return divided by the standard deviation.We tested the strategies on portfolios of varying sizes (10, 25, 50, and 100 startups) and observed how they performed under different levels of uncertainty.

Results: What Did We Learn?

The results highlighted the strengths and weaknesses of each strategy:1. Point Prediction:

- Strength: Highest median returns in small portfolios (e.g., 10 startups).

- Weakness: Extremely concentrated portfolios, leading to high risk.2. Robust Point Prediction:

- Strength: Best risk-adjusted returns across all portfolio sizes.

- Weakness: Lower median returns compared to Weighted Average in small portfolios.3. Weighted Average:

- Strength: Balanced approach with strong performance in medium-sized portfolios (e.g., 25-50 startups).

- Weakness: Slightly lower risk-adjusted returns compared to Robust Point Prediction.

Key Takeaways

1. Diversification Is Critical: Over-concentrated portfolios are risky, especially in uncertain environments. Robust optimization effectively enforces diversification.

2. Hybrid Approaches Offer Flexibility: Weighted Average prescriptions combine the best of both worlds, balancing binary success probabilities with continuous returns.

3. Practical Constraints Matter: Real-world scenarios often require additional constraints, such as limits on sector-specific allocations or maximum exposure to a single startup. Future iterations can incorporate these constraints to enhance applicability.

Conclusion

This two-step framework—prediction followed by prescription—bridges the gap between machine learning insights and actionable investment strategies. While predictive models identify promising startups, robust and weighted optimization methods ensure that portfolios are both profitable and resilient.In the next phase of our work, we plan to explore sector-specific diversification strategies and dynamic allocation models to further refine the robustness of these methods. With this comprehensive approach, we aim to provide venture capitalists with a practical, data-driven toolkit for navigating the high-stakes world of startup investments.

Learning to Swing: Teaching AI to Improvise in Jazz

Can AI Improvise Like a Jazz Musician?

Jazz improvisation is a deeply creative and nuanced art form, often posing significant challenges for learners. How can AI contribute to this domain? Can it help aspiring musicians discover improvisational patterns from masters like John Coltrane or Charlie Parker? These were the questions we sought to answer through JazzBot, an AI-powered web app designed to generate jazz solos based on user input, combining cutting-edge machine learning techniques with the rich tradition of jazz improvisation.In this post, we’ll dive into the technical journey of creating JazzBot, from encoding music data to training our AI models, and the invaluable lessons we learned throughout this process.

Why Jazz? Motivations and Challenges

Improvisation is the soul of jazz, where musicians create spontaneous solos within specific rhythmic and tonal frameworks. However, mastering this skill independently can be daunting due to the complexity of jazz theory. We aimed to bridge this gap with JazzBot, an AI application that generates jazz solos by learning from the greats, providing aspiring musicians with an educational and creative tool to study improvisation patterns and incorporate them into their own playing.

Representing Music for Machine Learning

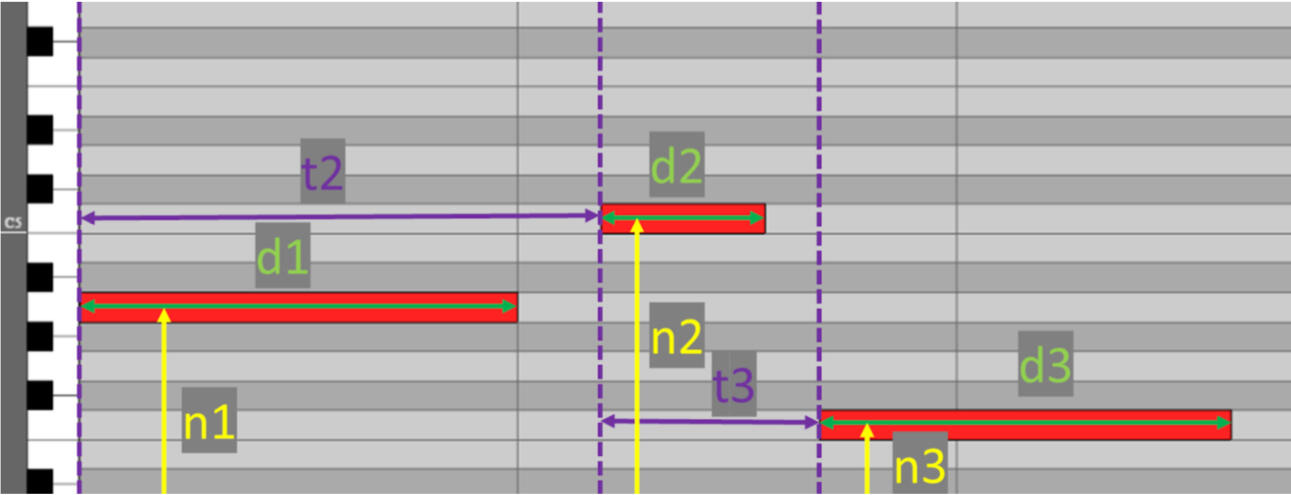

To ensure that our AI could interpret and generate music effectively, we carefully selected a representation format. Instead of using raw audio (e.g., WAV files), which is computationally expensive, we opted for MIDI files. These files provide a structured yet compact way to encode musical information, such as pitch, duration, and dynamics.

Pitch (n): Represents the note's frequency.

Duration (d): The length of time the note is held.

Time Offset (t): The temporal gap between two consecutive notes.

Velocity (v): Indicates the intensity or "attack" of the note.

Discretizing these parameters resulted in a vocabulary of 544 symbols, capturing the essence of musical patterns while remaining computationally efficient. For example, we used a time offset granularity of 1/12 of a beat, ensuring compatibility with the intricate rhythms of jazz.

In order to manipulate and produce new MIDI files easily, we constructed a web application with a piano roll interface that allows the user to construct a sequence of piano notes with different pitches and durations. This representation of music is simnple enough to be perfectly converted in a n-d-t-v format according to the definitions given above.

Datasets: Learning from the Masters

Our training relied on two primary datasets:1. Weimar Jazz Database (WJazzD): A curated collection of over 200,000 annotated notes from jazz legends like Miles Davis and Charlie Parker.

2. GiantMIDI-Piano Dataset: Featuring 38.7 million notes from classical piano recordings, this dataset was used for pre-training our model.

The model was first pre-trained on the classical dataset to understand general musical patterns and later fine-tuned on WJazzD to specialize in jazz improvisation.

The Model: Leveraging Transformer Architecture

Transformers, originally designed for natural language processing, are particularly well-suited for music generation due to their ability to capture long-term dependencies. We implemented a custom transformer-based model, inspired by architectures like OpenAI’s MuseNet and Gaëtan Hadjeres' Piano Inpainting Application.

Key Features of Our Model

1. Multi-Output Linear Layers: To maintain the correct sequence of attributes (n, d, t, v), we incorporated four specialized output layers. Each layer was trained to predict a specific attribute, ensuring coherent and musically meaningful outputs.

2. Deterministic Constraints: To further ensure that generated sequences adhered to the expected attribute order, we implemented constraints in the model architecture. This approach also reduced the number of trainable parameters, improving computational efficiency.

Training and Optimization

The training process involved two stages:1. Pre-Training: Using the GiantMIDI dataset to initialize the model's parameters.

2. Fine-Tuning: Focusing on WJazzD to refine the model’s understanding of jazz-specific improvisational patterns.

We employed advanced techniques such as stochastic gradient descent for optimization and Ray Tune for hyperparameter tuning, ensuring robust and efficient training. The final model was trained using parallel processing across multiple GPUs, leveraging PyTorch and Lightning frameworks.

Generating Music: The JazzBot Web App

Once trained, JazzBot was able to generate jazz solos by extending a user-provided melody. Users could control the "randomness" of the output through a temperature parameter, which influenced the creativity of the generated sequence. Low temperatures produced predictable but coherent solos, while high temperatures led to more adventurous, innovative outputs.To make our model accessible, we deployed it as a web app using Streamlit. The app allowed users to input melodies and experiment with generated solos, fostering a collaborative and educational experience. We even hosted a competition among our peers to showcase JazzBot’s capabilities and gather feedback.

The video above showcases an example of a generated improvisation based on the opening notes of a solo by Benny Carter. While the generated segment retains similar pitch ranges and rhythmic durations to the original, the melodic quality is notably less appealing compared to the authentic extract.The model also learned to adapt its start time tokens t and duration tokens d so that notes don't overlap but appear in a sequence without silences, just like in Benny Carter's piece

Evaluating the Model: Statistical and Musical Analysis

Statistical Validation

We analyzed the distributions of generated notes (pitch, duration, time offset, and velocity) and found them to closely match the training data. This alignment demonstrated the model's ability to learn and reproduce the statistical properties of jazz improvisation.

Musical Coherence

Musically, the generated solos adhered to jazz tonal and rhythmic patterns, although they occasionally veered into dissonance over extended sequences. The issue of repetition in the generation has been observed in natural language Transformers when the training set is too small, which is probably the main problem we are facing here. These insights highlight the model's potential while pointing to areas for improvement, such as penalizing repetitive structures in the loss function.

Lessons Learned and Future Directions

Our journey underscored the importance of interdisciplinary approaches in AI:1. Music Representation: Choosing the right encoding format is crucial for balancing data fidelity and computational efficiency.

2. Model Design: Customizing transformer architectures for domain-specific tasks can significantly improve performance.

3. Feedback Loops: Real-world deployment and user interactions provide invaluable insights for iterative improvement.

Looking ahead, we aim to explore more advanced architectures like MusicLM and refine our model to better handle longer musical sequences. Beyond jazz, the techniques developed in this project could be adapted to other domains, such as protein sequence generation or educational tools for other art forms.